I am a Research Scientist at Meta. My research interests lie in computer vision and graphics, in particular 3D reconstruction and image animation. I received my Ph.D. from Skoltech under the supervision of Victor Lempitsky. During my Ph.D., I was also a Lead Research Scientist at Samsung AI Center. After that, I was a postdoc at the AIT Lab at ETH Zurich.

Representative papers are highlighted.

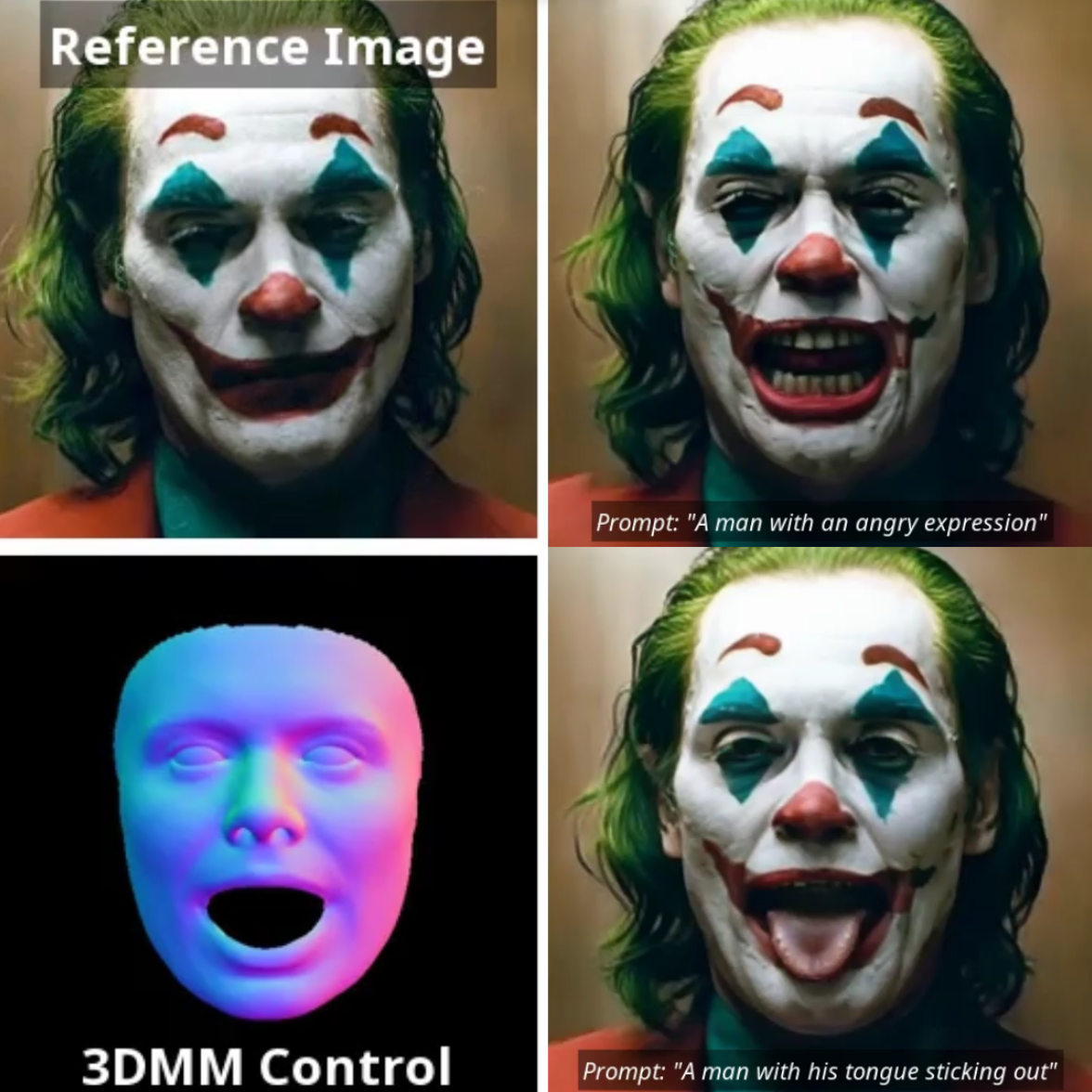

Malte Prinzler, Egor Zakharov, Vanessa Sklyarova, Berna Kabadayi, Justus Thies. International Conference on 3D Vision (3DV), 2025. project page / video / arXiv. Conditional synthesis of 3D human heads with extreme expressions.

Phong Tran, Egor Zakharov, Long Nhat Ho, Liwen Hu, Adilbek Karmanov, Aviral Agarwal, McLean Goldwhite, Ariana Bermudez Venegas, Anh Tuan Tran, Hao Li. ACM Transactions on Graphics (SIGGRAPH Asia), 2024. project page / video / arXiv. Highly expressive and animatable 3D human head avatars for VR telepresence.

Egor Zakharov, Vanessa Sklyarova, Michael J. Black, Giljoo Nam, Justus Thies, Otmar Hilliges. ECCV, 2024. project page / video / arXiv. Reconstruction of highly personalized, realistically rendered strand-based hairstyles from a monocular video.

Phong Tran, Egor Zakharov, Long Nhat Ho, Anh Tuan Tran, Liwen Hu, Hao Li. CVPR, 2024. project page / video / arXiv. Instant and animatable 3D human head avatars from a single image with disentangled expression reenactment.

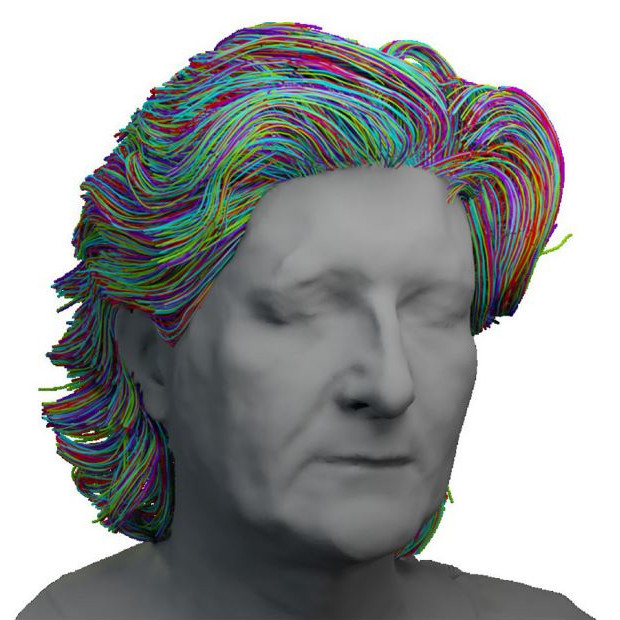

Vanessa Sklyarova, Egor Zakharov, Otmar Hilliges, Michael J. Black, Justus Thies. CVPR, 2024. project page / video / arXiv. A text-guided diffusion model for strand-based hairstyle generation and editing.

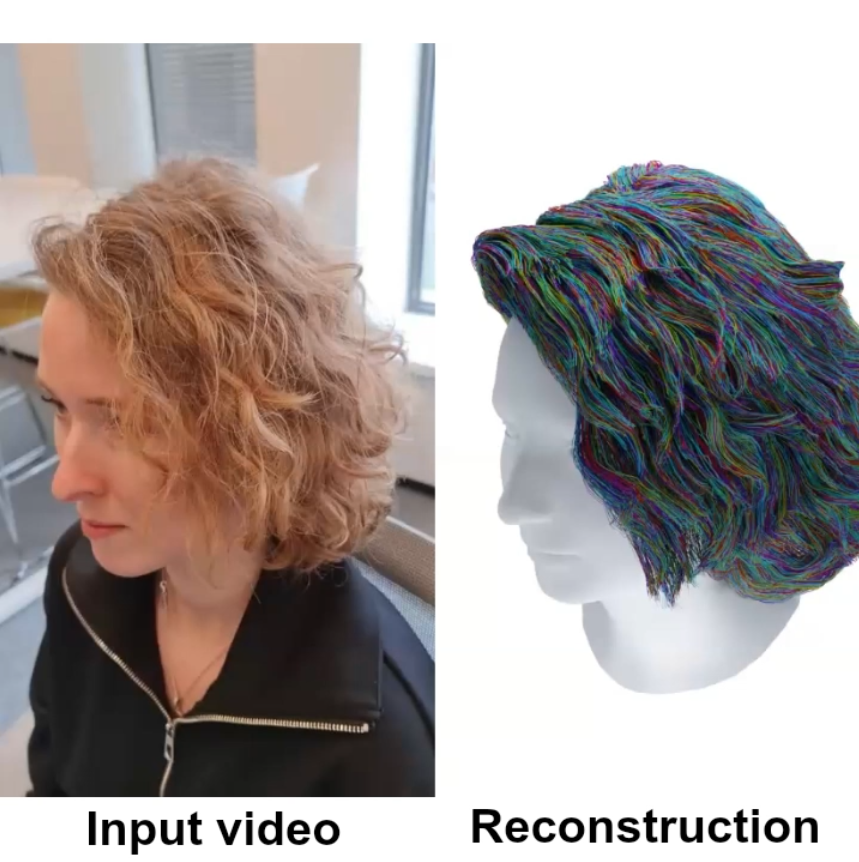

Vanessa Sklyarova, Jenya Chelishev, Igor Medvedev, Andreea Dogaru, Victor Lempitsky, Egor Zakharov. ICCV, 2023 (Oral Presentation, top 1.8% of submissions). project page / video / arXiv. Reconstructing personalized and realistic hairstyles in the form of 3D strands from a smartphone video.

Andreea Dogaru, Andrei-Timotei Ardelean, Savva Ignatyev, Egor Zakharov, Evgeny Burnaev. CVPR, 2023. project page / arXiv. We improve existing surface reconstruction methods with a plug-and-play hybrid representation, achieving up to 18% higher reconstruction quality and approximately 2x faster convergence.

Taras Khakhulin, Vanessa Sklyarova, Victor Lempitsky, Egor Zakharov. ECCV, 2022. project page / arXiv. We create animatable 3D head reconstructions in the form of a rigged mesh from a single image by leveraging facial priors and differentiable rendering of in-the-wild video data.

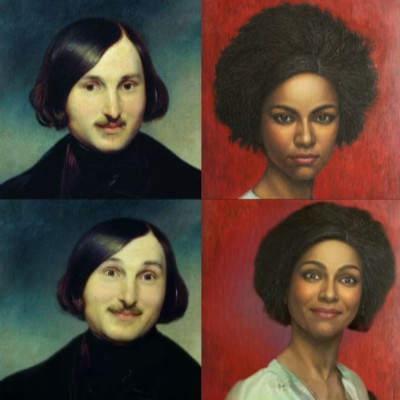

Nikita Drobyshev, Jenya Chelishev, Taras Khakhulin, Aleksei Ivakhnenko, Victor Lempitsky, Egor Zakharov. ACM MM, 2022. project page / arXiv. Learning disentangled expression and appearance representations for high-resolution portrait animation.

Egor Zakharov, Aleksei Ivakhnenko, Aliaksandra Shysheya, Victor Lempitsky. ECCV, 2020. project page / video / arXiv. Speeding up the inference of neural head avatars with an efficient architecture that achieves real-time performance on mobile devices.

Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, Victor Lempitsky. ICCV, 2019 (Oral Presentation, top 4.6% of submissions). video / arXiv. Leveraging a large corpus of weakly annotated video data to achieve realistic synthesis of human heads in a few-shot setting using meta-learning and GAN-based training.

Aliaksandra Shysheya, Egor Zakharov, Kara-Ali Aliev, Renat Bashirov, Egor Burkov, Karim Iskakov, Aleksei Ivakhnenko, Yury Malkov, Igor Pasechnik, Dmitry Ulyanov, Alexander Vakhitov, Victor Lempitsky. CVPR, 2019 (Oral Presentation, top 5.6% of submissions). project page / arXiv. We integrate classical and neural rendering approaches to make avatar renders robust to poses and views unseen during training.

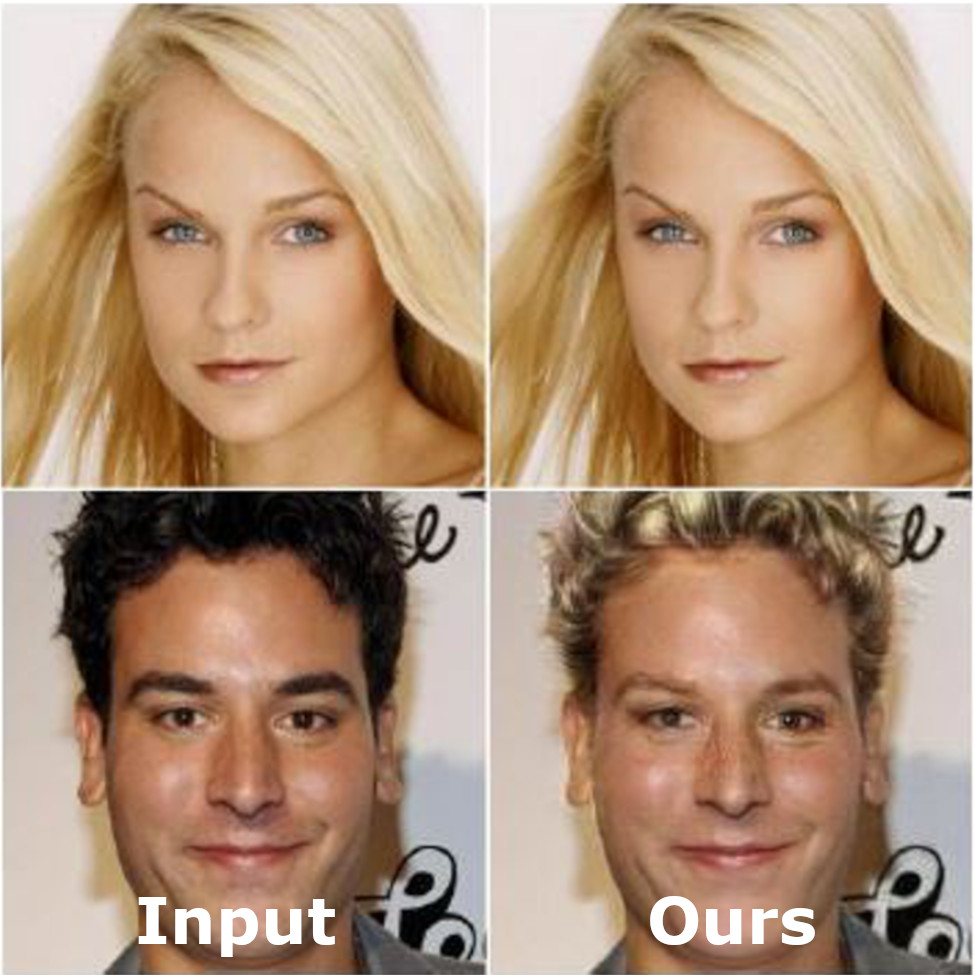

Diana Sungatullina, Egor Zakharov, Dmitry Ulyanov, Victor Lempitsky. ECCV, 2018. project page / arXiv. We combine GAN-based and perceptual losses in a single training method, which excels primarily at facial attribute manipulation.

This website uses a template by Jon Barron.